AI Coding: From Vibes to Proofs

Ryan Rapp

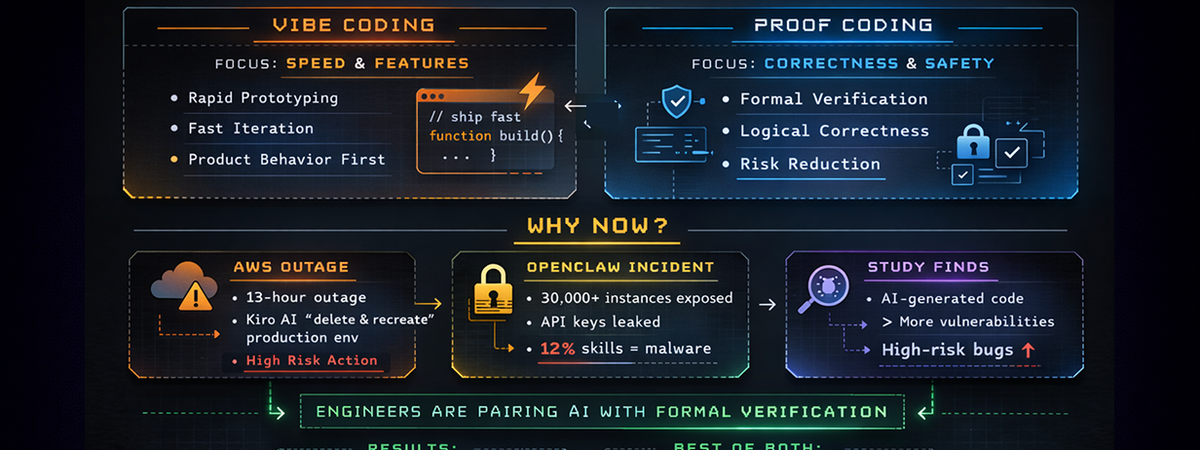

After seeing worrying trends - AWS suffering a 13-hour outage when its Kiro AI agent decided to "delete and recreate" a production environment, OpenClaw exposing over 30,000 instances with leaked API keys and 12% of its skills marketplace found to be malware, and a large-scale study finding AI-generated code contains more high-risk vulnerabilities than human-written code - I've noticed an encouraging counter-trend:

Engineers pairing AI coding with formal verification.

I define "Proof Coding" as utilizing formal verification tools as part of AI coding in order to create logically correct software in ways that would have been impractical prior to AI. I've incorporated it into my own work with encouraging results.

It is a natural complement to Vibe Coding, which prioritizes speed and product behavior. Proof Coding instead prioritizes correctness, reducing risk and allowing teams to work more quickly in the long run.

These incidents shouldn't make us skeptical of AI coding broadly. They should make us skeptical of our current practices around how engineers interface with AI.

How We Got Here

At the start of AI coding, it was undoubtedly more profitable for OpenAI, Google, Anthropic, and others to build for a large base of enthusiasts than for a smaller group of experts, many of whom started out dismissive of AI. Accordingly, just as Internet enthusiasm was the original shaping force of JavaScript, Vibe Coding enthusiasts have deeply influenced today’s AI coding products. The trial-and-error style of mass adoption has brought countless rapid advances, creating the biggest shift in software development since the dawn of the Internet. While the importance of AI labs’ groundbreaking work creating extraordinarily capable models cannot be overstated, it is Vibe Coding enthusiasts who have popularized the tools and shaped them into fire-and-forget patterns of increasing AI autonomy and usefulness. The latest Vibe Coding trend is OpenClaw, an autonomous self-hosted agent that pairs impressive assistant capabilities with well-known security vulnerabilities.

The early stages of the World Wide Web have parallels to Vibe Coding beyond just the pace of adoption. Early JavaScript had many quirks and bugs that were difficult to patch without breaking websites. Each time a new browser was released, people would roll back to prior versions so they could do everyday things such as booking flights or checking their bank balance. Over time, however, W3C and ECMAScript standards and new languages like TypeScript brought rigor to the wild west of JavaScript. Similarly, new AI-engineer modalities are emerging as experts develop new ways of working with AI.

One such alternative way for engineers to work with AI is a pattern I am calling Proof Coding.

What is Proof Coding?

I define “Proof Coding” as utilizing formal verification tools as part of AI coding in order to create logically correct software in ways that would have been impractical prior to AI.

Mathematical proofs offer an example of how Proof Coding can work. In math, theorems are often proved constructively: you describe the properties a solution must satisfy, then show your candidate meets all of them. Proof Coding works the same way: the engineer clearly defines formal properties the solution must satisfy and provides a method to validate correctness.

Vibe Coding gives AI an aesthetic target - an interaction or set of behaviors - validated quickly by anyone with acceptably good taste. Proof Coding gives AI a mathematical target. Instead of describing a library in terms of modules or algorithms, you describe it in terms of algebraic properties and structural invariants, then let the AI design, implement, and verify the result.

For example, you might specify:

- Identity: merge(A, ∅) = A - merging with an empty state returns the original

- Commutativity: merge(A, B) = merge(B, A) - order of merging doesn't matter

- Idempotency: merge(A, A) = A - merging something with itself is a no-op

- Convergence: for any set of replicas {R₁, R₂, ..., Rₙ} that have received the same updates in any order, all replicas arrive at the same state

These, together with associativity (merge(merge(A, B), C) = merge(A, merge(B, C))), are the core properties of a state-based Conflict-Free Replicated Data Type (CRDT), a family of structures built around the same convergence guarantee that real-time collaboration tools like Figma and Google Docs depend on. With Proof Coding, you specify the mathematical contract and let AI handle the implementation, while the properties serve as a machine-verifiable guarantee of correctness.
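To make the contract concrete, here is a minimal sketch assuming a grow-only counter (G-Counter), one of the simplest state-based CRDTs, whose merge keeps the larger count per replica. The names `increment`, `merge`, `value`, and `check_merge_laws` are hypothetical. The check is randomized testing of identity, commutativity, idempotency, associativity, and convergence: it samples inputs rather than proving the laws for all inputs, which is what a proof assistant would add.

```python
import random
from typing import Dict

# Sketch (assumption): a G-Counter state maps each replica ID to the
# number of increments that replica has performed.
GCounter = Dict[str, int]

EMPTY: GCounter = {}


def increment(state: GCounter, replica: str, amount: int = 1) -> GCounter:
    """Return a new state with one replica's count increased by `amount`."""
    updated = dict(state)
    updated[replica] = updated.get(replica, 0) + amount
    return updated


def merge(a: GCounter, b: GCounter) -> GCounter:
    """Join two states by keeping the larger count for every replica."""
    return {r: max(a.get(r, 0), b.get(r, 0)) for r in a.keys() | b.keys()}


def value(state: GCounter) -> int:
    """The counter's observable value is the sum of all replica counts."""
    return sum(state.values())


def random_state(rng: random.Random) -> GCounter:
    """Build a random state from a few positive increments."""
    state: GCounter = {}
    for _ in range(rng.randint(0, 5)):
        state = increment(state, rng.choice("xyz"), rng.randint(1, 3))
    return state


def check_merge_laws(trials: int = 500, seed: int = 0) -> None:
    """Randomized check of the merge laws: it samples inputs, it does not prove."""
    rng = random.Random(seed)
    for _ in range(trials):
        a, b, c = random_state(rng), random_state(rng), random_state(rng)
        assert merge(a, EMPTY) == a                            # identity
        assert merge(a, b) == merge(b, a)                      # commutativity
        assert merge(a, a) == a                                # idempotency
        assert merge(merge(a, b), c) == merge(a, merge(b, c))  # associativity
        # Convergence: replicas that receive the same states in different
        # orders must end up identical.
        finals = []
        for order in ([a, b, c], [c, b, a], [b, c, a]):
            replica = EMPTY
            for update in order:
                replica = merge(replica, update)
            finals.append(replica)
        assert all(final == finals[0] for final in finals)


check_merge_laws()
left = increment(EMPTY, "x")        # replica x counted once
right = increment(EMPTY, "y", 2)    # replica y counted twice
print(value(merge(left, right)))    # 3
```

Taking the per-replica maximum (rather than adding counts) is what makes the merge idempotent: receiving the same state twice changes nothing.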

This principle isn't new. With RSA, nobody has to call the recipient to confirm that data arrived untampered. Encryption keeps the data confidential, and a digital signature lets the recipient's machine mathematically verify that it is intact. Proof Coding extends this: define mathematical requirements, and let AI produce implementations that are self-verifying against those requirements.

The pattern of building on formally verified logic shows up everywhere - streaming protocols, blockchains, end-to-end encryption, and so on. In each case, the mathematical properties are durable even as implementations get rewritten.

As AI improves, it will push experts to think in terms of the constraints governing their software rather than the exact implementation.

From Library Properties to Application Properties

Nobody is implementing a CRDT from scratch for their app. They're pulling in a library. But the library having correct properties doesn't mean your application does. A CRDT library can guarantee that merge(A, A) = A, but what a user actually cares about is: if they create a new account and the system finds existing settings, do those merge into a working account? Or do they hit a state bug that requires clearing cookies or reinstalling the app?

This is where Proof Coding gets practical. The real opportunity is carrying mathematical properties through into the application itself. A library's concern is algebraic: merging with the empty set returns the original. An application's concern is experiential: a user's data is never silently lost, conflicting edits resolve predictably, and no sequence of normal actions puts the system into an unrecoverable state. AI is uniquely suited to tracing those guarantees through layers of abstraction that would be tedious and error-prone for a human to verify by hand.
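As an illustration of carrying properties into the application, here is a minimal sketch of the account-settings scenario above. It assumes a hypothetical settings store in which each value carries the timestamp of its last write and the latest write wins; `merge_settings`, `no_setting_lost`, and `order_independent` are illustrative names, not a real library's API. The properties are stated in the user's terms (no setting silently dropped, merge order irrelevant) rather than the library's.

```python
from typing import Dict, Tuple

# Assumption: a hypothetical settings store where every value carries the
# timestamp of its last write, e.g. {"theme": (1700000000, "dark")}.
Settings = Dict[str, Tuple[int, str]]


def merge_settings(existing: Settings, incoming: Settings) -> Settings:
    """Last-writer-wins merge of two settings maps.

    Tuples compare by timestamp first, then by value, so ties resolve the
    same way regardless of which side is passed first.
    """
    merged: Settings = {}
    for key in existing.keys() | incoming.keys():
        merged[key] = max(side[key] for side in (existing, incoming) if key in side)
    return merged


def no_setting_lost(existing: Settings, incoming: Settings, merged: Settings) -> bool:
    """Application property: every setting the user had on either side survives."""
    return merged.keys() == existing.keys() | incoming.keys()


def order_independent(existing: Settings, incoming: Settings) -> bool:
    """Application property: which side is 'existing' never changes the outcome."""
    return merge_settings(existing, incoming) == merge_settings(incoming, existing)


existing = {"theme": (5, "dark"), "language": (1, "en")}
incoming = {"theme": (3, "light"), "notifications": (4, "off")}
merged = merge_settings(existing, incoming)
print(merged["theme"])  # (5, 'dark'): the newer write wins
print(no_setting_lost(existing, incoming, merged), order_independent(existing, incoming))
```

Last-writer-wins still discards the older value of a conflicting key. Whether that counts as data being lost is a requirement the engineer has to decide and encode as a property.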

Who Validates the Validator?

To be clear: manual testing and line-by-line code review remain the gold standard for software correctness. Self-verifying code is a practical supplement, not a replacement - and it cannot protect against extreme cases like runtime attacks that alter the meaning of the code itself. But for the vast majority of correctness concerns, formal properties dramatically reduce the surface area an expert needs to review.

Mitchell and Shaaban's research on "Vibe Reasoning" identifies a structural issue worth understanding: LLMs prioritize user commands over code consistency, leading to what they call "constraint-reconciliation decay." As a codebase grows through iterative prompting, the AI loses track of accumulating design constraints and introduces contradictions the developer can't easily spot. Their prototype sidecar system, integrated with Claude Code, was able to automatically flag and fix these kinds of bugs - evidence that AI-assisted verification tooling is viable, but also that unverified Vibe Coding accumulates hidden risk with each iteration.

It's fair to question whether AI is ready to directly write mission-critical systems. But can AI play a helpful role in supporting that effort by helping write proofs and verifications? As Kleppmann points out, writing proofs is actually one of the best applications for LLMs: it doesn't matter if they hallucinate, because the proof checker rejects any invalid proof and forces the AI to retry. This is fundamentally different from code generation, where hallucinations can silently ship to production. The expert's job shifts from verifying the code to verifying the specifications the proofs are checked against, which can have orders of magnitude fewer touchpoints.
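A real proof checker is far stricter than anything that fits in a few lines, but the generate-and-reject loop can be sketched with a property checker standing in for it. This is an illustration under stated assumptions: the three candidate functions are hypothetical stand-ins for AI proposals, and the checks sample inputs rather than proving anything for all inputs, which is what a proof assistant such as Rocq would add. The point is that a wrong candidate is rejected mechanically instead of shipping.

```python
from typing import Callable, Dict, List

Merge = Callable[[int, int], int]

# Hypothetical stand-ins for merge functions an AI might propose for
# combining two non-negative per-replica counts.
CANDIDATES: Dict[str, Merge] = {
    "sum": lambda a, b: a + b,       # breaks idempotency: 3 + 3 != 3
    "prefer_left": lambda a, b: a,   # breaks commutativity
    "max": lambda a, b: max(a, b),   # satisfies all three laws
}


def violations(merge: Merge, samples: List[int]) -> List[str]:
    """Names of the laws this candidate breaks on the sampled inputs."""
    pairs = [(a, b) for a in samples for b in samples]
    broken = []
    if any(merge(a, 0) != a for a in samples):
        broken.append("identity")
    if any(merge(a, b) != merge(b, a) for a, b in pairs):
        broken.append("commutativity")
    if any(merge(a, a) != a for a in samples):
        broken.append("idempotency")
    return broken


def first_accepted(candidates: Dict[str, Merge], samples: List[int]) -> str:
    """Reject candidates until one passes every check, like a retry loop."""
    for name, merge in candidates.items():
        broken = violations(merge, samples)
        if not broken:
            return name
        print(f"rejected {name}: violates {', '.join(broken)}")
    raise ValueError("no candidate satisfied the specification")


print(first_accepted(CANDIDATES, samples=list(range(10))))
# rejected sum: violates idempotency
# rejected prefer_left: violates commutativity
# max
```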

The key asymmetry between the engineer and the AI is accountability. When humans work together, one person implements and the other validates - and these roles are regularly swapped. With AI, there is no real-world accountability. Whoever trained the model will not refund Amazon's losses from an AI-caused outage. Until a new paradigm emerges, humans must fill the validator role, and optionally the implementor role - not the other way around.

Why Can't AI Write the Properties Itself?

AI can and should propose properties that attempt to capture business requirements. But until the alignment problem is resolved, formal properties serve as a readily-interpretable backstop. Formal logic properties are a layer of intent that cannot be bypassed regardless of how capable the model becomes. AI labs are working on training models to resist tampering, but none today would condone giving an AI unchecked access to critical systems. (Whether such access can ever be made safe is an open question.)

With Proof Coding, AI is making formal specification practical for the first time. Due to the sheer cost, rigorous correctness proofs were historically reserved for avionics, medical devices, and nuclear systems. AI has compressed that cost dramatically, opening the door for formal approaches in ordinary software.

The Economics

Up to a certain threshold of quality, safety, and security, Vibe Coding is pushing the cost of software toward zero. This is genuinely exciting - it democratizes creation and accelerates iteration in ways we've never seen.

However, as Osmani argues, this creates a new bottleneck: verification, not generation, becomes the rate-limiting step. AI can generate code 10x faster, but if a human spends the same amount of time reviewing and fixing it, the net gain is marginal. This is Amdahl's Law applied to software: speeding up generation doesn't help if verification remains the dominant cost.
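The arithmetic behind that claim can be sketched with Amdahl's Law. The fractions below are illustrative assumptions, not measurements: if generation is only half of the total effort, making it 10x faster yields well under 2x overall, because review and verification are untouched.

```python
def overall_speedup(generation_fraction: float, generation_speedup: float) -> float:
    """Amdahl's Law: only the generation share of the work gets faster.

    generation_fraction: share of total time originally spent writing code (0..1).
    generation_speedup: how many times faster that share becomes with AI.
    """
    if not 0.0 <= generation_fraction <= 1.0:
        raise ValueError("generation_fraction must be between 0 and 1")
    if generation_speedup <= 0:
        raise ValueError("generation_speedup must be positive")
    # Time left after the speedup, as a fraction of the original total.
    remaining = (1.0 - generation_fraction) + generation_fraction / generation_speedup
    return 1.0 / remaining


for fraction in (0.2, 0.5, 0.8):
    print(f"{fraction:.0%} generation, 10x faster -> {overall_speedup(fraction, 10):.2f}x overall")
# 20% generation, 10x faster -> 1.22x overall
# 50% generation, 10x faster -> 1.82x overall
# 80% generation, 10x faster -> 3.57x overall
```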

Vibe Coding will likely expand the market with useful software that covers a broader base of unique and exciting use cases. This is a good thing: more useful software in turn expands the need for secure, reliable, and safe foundations underneath, such as securely integrated payment processing or powerful personal agents that run in secure execution environments. Having one without the other can deliver zero or even negative value. Accordingly, Vibe Coding and Proof Coding are complementary: the more one grows, the more the other can grow with it.

Hiring a Proof Coder

If Proof Coding resonates, the natural question is: how do you hire for it?

Include a rigorous technical interviewer who does not outsource to AI. You don't want AI backstopping a hiring process for a role that's meant to backstop your AI.

Include system integrity questions. For coding portions of the interview that use AI, consider limiting the candidate’s access to a less capable model that won't one-shot the problem. This lets the interviewer see how the candidate detects and corrects broken or insecure logic generated by the AI. (Even with a difficult problem, it’s hard to predict whether a frontier model will make mistakes.)

Assign a problem in which the candidate uses AI to create software with self-verified properties. Give candidates access to a frontier model for this. The deliverable isn't just working code: it's code with formally defined invariants and verification that those invariants hold. The candidate should be able to describe why those invariants matter and how they are verified.
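As an illustration of what such a deliverable might look like, here is a minimal sketch assuming a hypothetical ledger exercise (`Ledger`, `transfer`, and `check_random_sequences` are illustrative names). The invariants (money is conserved, no balance goes negative) are written down explicitly and checked after every step of many randomly generated transfer sequences, including invalid requests. Randomized sequence testing gathers evidence rather than proof; a strong candidate might go further with a model checker or proof assistant.

```python
import random
from typing import Dict


class Ledger:
    """Hypothetical take-home exercise: transfers between named accounts."""

    def __init__(self, balances: Dict[str, int]) -> None:
        self.balances = dict(balances)
        self.total = sum(self.balances.values())  # fixed at creation
        self.check_invariants()

    def check_invariants(self) -> None:
        """Stated invariants: money is conserved and never negative."""
        if sum(self.balances.values()) != self.total:
            raise AssertionError("total balance changed")
        if any(balance < 0 for balance in self.balances.values()):
            raise AssertionError("negative balance")

    def transfer(self, src: str, dst: str, amount: int) -> bool:
        """Move funds if the request is valid; otherwise change nothing."""
        if (src == dst or amount <= 0
                or src not in self.balances or dst not in self.balances
                or self.balances[src] < amount):
            return False
        self.balances[src] -= amount
        self.balances[dst] += amount
        self.check_invariants()
        return True


def check_random_sequences(runs: int = 200, steps: int = 50, seed: int = 1) -> None:
    """Drive many random transfer sequences and re-check invariants each step."""
    rng = random.Random(seed)
    names = ["alice", "bob", "carol"]
    for _ in range(runs):
        ledger = Ledger({name: rng.randint(0, 100) for name in names})
        for _ in range(steps):
            ledger.transfer(rng.choice(names), rng.choice(names), rng.randint(-10, 60))
            ledger.check_invariants()


check_random_sequences()
```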

Assess understanding of algorithmic properties and how to verify them. Can the candidate articulate what makes a merge operation commutative? Can they explain why idempotency matters for retry logic? This is the core competency.
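Why idempotency matters for retries fits in a few lines. This is a minimal sketch assuming a hypothetical payment service that deduplicates requests by a client-supplied idempotency key (the class and method names are illustrative). A client that times out and retries cannot be charged twice, because applying the same request again leaves the state unchanged: the same shape as merge(A, A) = A.

```python
from typing import Dict


class PaymentService:
    """Hypothetical charge endpoint that deduplicates by idempotency key."""

    def __init__(self) -> None:
        self.balance_cents = 0
        self._results: Dict[str, int] = {}  # idempotency key -> first result

    def charge(self, idempotency_key: str, amount_cents: int) -> int:
        """Apply a charge once; retries with the same key replay the first result."""
        if idempotency_key in self._results:
            return self._results[idempotency_key]
        self.balance_cents += amount_cents
        self._results[idempotency_key] = self.balance_cents
        return self.balance_cents


service = PaymentService()
service.charge("order-42", 500)
service.charge("order-42", 500)  # client timed out and retried the same request
print(service.balance_cents)     # 500: charged once, not twice
```

The sketch ignores concurrent retries and a retry that reuses a key with a different amount; a production design would have to decide how to handle both.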

Some will argue that familiarity with AI tools is sufficient and that deep technical questions are unnecessary - after all, the candidate can just ask AI, right? For an engineer tasked with system integrity, there's a clear reason these questions matter: this is the person validating AI's work on the highest-risk parts of your system. If you face pushback from peers on asking these questions, it's worth disambiguating the role you're hiring for. Not every engineer on the team needs this profile. But there should be a Proof Coder somewhere on the team reviewing work for the critical, high-risk portions of your product and infrastructure.

Open Questions

The need for experts to hand-write CSS, HTML, or even SQL is fading. Hand-optimized algorithms may have their days numbered. Yet experts continue to be invaluable pillars in the overarching technical integrity of your products, systems, and organization. AI is raising the quality ceiling, and Proof Coding is one modality for defining mathematical contracts that ensure correctness, prevent race conditions, and maintain security compliance.

Questions I continue to think about:

- Is Proof Coding practical beyond infrastructure and protocols?

- Can formal specification scale to messy, human-facing products where the requirements themselves are ambiguous?

- Is the validator/implementor split I've described a durable paradigm, or a transitional one that AI accountability models will eventually make obsolete?

I don't know if anyone has good answers to these yet. However, the paradigms we embrace as we collaborate with AI will have ramifications for the speed and quality of the software we create. My hope is that we can identify and apply effective ways for humans and AI to work together to efficiently create useful and trustworthy products.

Further Reading

Addy Osmani, "The Trust, But Verify Pattern for AI-Assisted Engineering" - Makes the case that verification, not generation, is the new development bottleneck, and lays out practical guardrails for teams using AI coding tools.

Bayazıt, Li & Si, "A Case Study on the Effectiveness of LLMs in Verification with Proof Assistants" (2025) - Evaluates how well LLMs can generate formal proofs using proof assistants like Rocq, finding they perform well on small proofs and can produce concise, creative verification strategies.

Mitchell & Shaaban, "Position: Vibe Coding Needs Vibe Reasoning: Improving Vibe Coding with Formal Verification" (2025) - Argues that Vibe Coding's pitfalls (technical debt, security issues, code churn) stem from LLMs' inability to reconcile accumulating constraints, and proposes a formal methods sidecar system to make Vibe Coding more reliable.

Martin Kleppmann, "Prediction: AI Will Make Formal Verification Go Mainstream" (2025) - The author of Designing Data-Intensive Applications argues that AI is about to collapse the cost of formal verification. Writing proofs is one of the best applications for LLMs because hallucinations don't matter — the proof checker rejects invalid proofs. The challenge shifts to correctly defining specifications.
